Artificial intelligence (AI) is increasingly used in safety-critical product development. It delivers benefits to users across a wide range of industries, including manufacturing, healthcare, transport, finance and the wider digital sector.

It is clearly important that AI-based and functional safety systems are safe, secure and reliable for users and manufacturers; otherwise, people’s lives and company reputations are at risk. However, safety-related guidance aimed at senior managers is limited. This gap makes it harder to ensure that systems respond correctly to their inputs.

Our flyer on this topic aims to raise awareness of the key factors and to give these managers concise information to support their decision-making.

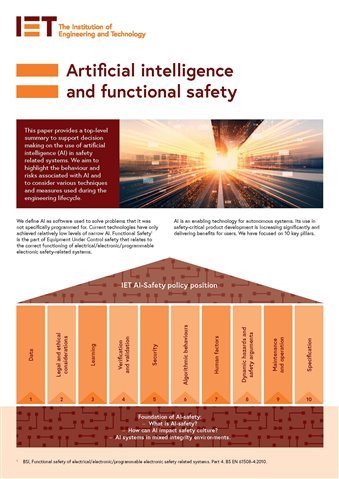

The flyer is built around 10 key ‘pillars’ that highlight AI-related considerations, together with the techniques and measures applied during the engineering lifecycle. It also explains the fundamental differences between traditional software and AI software.

We plan to follow this initial flyer with a more in-depth paper and further outputs in the coming months. We’d welcome your comments and ideas, both on the planned paper and on ways to reach audiences who could benefit from our work.

Download our flyer here for free: Artificial intelligence and functional safety