Artificial Intelligence (AI) is a revolutionary technology that has captured the imagination of scientists, innovators, and the public alike. However, when it comes to determining whether AI is inherently good or bad, the answer is neither. Last month, Dr Rachel Craddock mentioned in her blog post that the true nature of AI lies in the choices humans make regarding its development and application. In this blog post, we explore the multifaceted nature of AI and the importance of responsible AI deployment for the betterment of society.

An example of an AI-generated image made using Midjourney, a popular generative artificial intelligence program (Image source: carbongpt.ai)

A Limit to AI Advancement?

As AI continues to progress, a pertinent question arises: will there be a limit to its growth, like the limits placed on nuclear power? While it is challenging to predict the future of AI with certainty, it is essential to acknowledge that different generations will have varying perspectives on this matter. Younger generations often argue that attempting to control AI is futile, as the open-source community actively publishes AI tools, which allows for circumventing any imposed restrictions. Conversely, proponents of tighter regulations argue that more rules will result in accelerated AI development and advancement. Striking a balance between freedom and control becomes crucial to ensuring AI's positive impact.

Civitai is an example of a platform fueled entirely by community-generated models. The lack of diversity in the models uploaded is painfully obvious. (Image source: civitai.com)

The Dark Side of AI

The potential for misuse and exploitation of AI's capabilities raises concerns about cybersecurity, privacy breaches, and the proliferation of unethical practices. The ease of using AI to generate content, including text, images, and videos, poses significant risks. Platforms like CivitAI*, which enable users to share generative models, exemplify the potential for misuse. Without proper oversight, such platforms could become breeding grounds for the dissemination of objectionable, pornographic, or fake content, or even AI-created propaganda. This underscores the importance of implementing stringent measures to prevent the abuse of AI technologies.

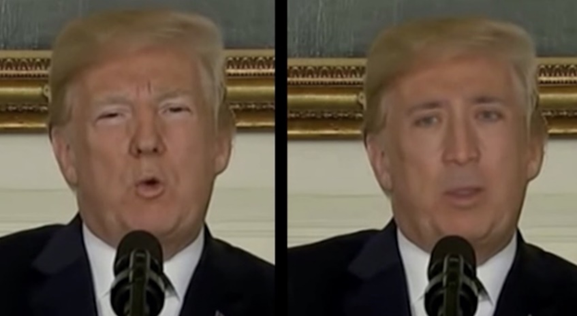

While deepfakes are not a novel concept, they continue to raise significant concerns. (Image source: https://www.inteldig.com/2019/07/adobe-entrena-inteligencia-artificial-para-detectar-deepfakes/)

Fighting Fire with Fire

In combating objectionable AI-generated content, the proverb "fighting fire with fire" rings true: AI itself can serve as the countermeasure. By harnessing “Narrow AI”, as Dr Rachel puts it in last month's post, we can effectively identify and address the consequences of objectionable content. Conventional manual moderation approaches fall short in the face of this challenge, highlighting the importance of AI in tackling a problem of this scale and complexity. Through meticulous training of AI models with annotated datasets, we can refine content detection capabilities, swiftly discern explicit material and deepfakes, and take the necessary moderation actions. However, it is crucial to address ethics and privacy concerns and to maintain human oversight, ensuring a responsible approach that fosters a safer online environment.
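For readers who like to see the idea in code, here is a minimal sketch of the loop described above: train a small model on annotated examples, score new content, and send uncertain cases to a human. Everything in it is an assumption made for illustration, not a description of any real platform's system: the toy dataset, the naive Bayes word-count model, the function names `train`, `score` and `moderate`, and the two thresholds are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical annotated dataset: 1 = objectionable, 0 = acceptable.
ANNOTATED = [
    ("fake celebrity video scam", 1),
    ("explicit fake image leak", 1),
    ("landscape painting sunset", 0),
    ("cute cat portrait painting", 0),
]


def train(examples):
    """Count word frequencies per label (a tiny naive Bayes model)."""
    counts = {0: Counter(), 1: Counter()}
    docs = Counter()
    for text, label in examples:
        counts[label].update(text.lower().split())
        docs[label] += 1
    vocab = set(counts[0]) | set(counts[1])
    return counts, docs, vocab


def score(model, text):
    """Return the estimated probability that `text` is objectionable."""
    counts, docs, vocab = model
    total_docs = docs[0] + docs[1]
    log_prob = {}
    for label in (0, 1):
        logp = math.log(docs[label] / total_docs)
        total_words = sum(counts[label].values())
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                # Laplace smoothing avoids zero probabilities.
                logp += math.log(
                    (counts[label][word] + 1) / (total_words + len(vocab))
                )
        log_prob[label] = logp
    # Normalise the two log-probabilities into a single probability.
    top = max(log_prob.values())
    p0 = math.exp(log_prob[0] - top)
    p1 = math.exp(log_prob[1] - top)
    return p1 / (p0 + p1)


def moderate(model, text, remove_at=0.9, review_at=0.5):
    """Route content: clear cases are automated, uncertain ones go to a person."""
    p = score(model, text)
    if p >= remove_at:
        return "remove"
    if p >= review_at:
        return "human_review"
    return "allow"


if __name__ == "__main__":
    model = train(ANNOTATED)
    for item in ("explicit fake video scam", "fake video", "cat painting"):
        print(item, "->", moderate(model, item))
```

The middle band between the two thresholds is where human oversight comes in: the model only acts alone when it is confident, and everything else is queued for a moderator. A real system would need far more data, image and video models rather than word counts, and careful evaluation for bias.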

Democratizing AI, a potential solution?

When AI becomes accessible to everyone, it is no longer a “superpower”. By democratizing AI, we can foster a level playing field and empower individuals and communities to leverage AI's potential for their own betterment. It also becomes crucial to educate people and equip them with the knowledge and skills needed to handle AI safely and ethically. (More about democratizing AI can be found in my other video blog post here)

In a nutshell…

While AI brings significant advancements and possibilities, it is not immune to misuse. The dark side of AI encompasses concerns regarding the generation and dissemination of objectionable content, cybersecurity threats, and privacy breaches. To mitigate these risks, it is crucial to establish strong safeguards, including regulations, ethical guidelines, and transparency practices. By fostering a responsible AI ecosystem, we can harness the transformative power of AI while safeguarding against its potential for harm.

This is the second in a series of posts from the IET AI Technical Network committee, examining AI and the concerns around it. Look out for next month’s blog, in which my fellow AI TN committee member, Ms. Kirsten McCormick, will be continuing this conversation.

What do you think about the democratization of AI technology? Should it continue, or should it be limited?

*The author acknowledges CivitAI's content moderation efforts, which enable users to report questionable models. The mention of CivitAI is solely used as an illustration of platforms that carry risks, as there is a possibility for malicious users to exploit such platforms to distribute potentially harmful content.
