With Artificial Intelligence (AI) being a hot topic, there have been many debates over safety and security in both developing and using this type of technology. This post focuses on the implementation of controls, in particular the EU’s Artificial Intelligence Act (AIA) [1], and how such regulation could affect innovation.

As previously discussed in this blog series, AI is a tool that many people can use to solve, or assist with, problems across many different domains and industries. Though the tool itself is neither good nor bad, it is key to have frameworks or acts in place to ensure that the development of these systems is ethically appropriate, safe and secure, and complies with existing laws on fundamental rights.

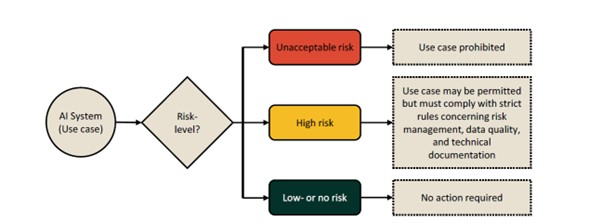

Although many frameworks have been proposed to regulate AI, a team of AI experts was brought together to write the AIA. The aim was to mitigate the risk of AI failures and lack of trust by introducing a risk-based approach to regulating AI systems. The AIA consists of rules on the implementation of AI, including a list of what are considered high-risk systems that need to conform to specific rules, as can be seen in Figure 1.

Figure 1: Risk categories for AI use cases under the AIA [2]

A use case is defined when developing an AI system: it identifies who the project stakeholders are, what problem the AI system should help solve, and how it will do so. The use case should then undergo a risk analysis from at least three perspectives: operational risk of failure, security risk of interference, and legal risks arising from failure. This analysis indicates the risk level associated with the system.
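To make the three-perspective analysis more concrete, here is a minimal Python sketch of how a team might record a use case and its risk analysis. Everything in it is an illustrative assumption rather than something the AIA prescribes: the `Level` scale, the `UseCase` and `RiskAnalysis` names, and the rule that the overall level is the worst of the three perspectives are all hypothetical, and the levels are not the AIA risk categories shown in Figure 1.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical ordinal scale for illustration; not defined by the AIA."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class UseCase:
    """What is defined when developing an AI system."""
    stakeholders: list[str]  # who the project stakeholders are
    problem: str             # what problem the system should help solve
    approach: str            # how the system will do this


@dataclass
class RiskAnalysis:
    """One rating per perspective named in the text."""
    operational: Level  # operational risk of failure
    security: Level     # security risk of interference
    legal: Level        # legal risks arising from failure

    def overall(self) -> Level:
        # Assumption: the overall level is driven by the worst perspective.
        return max(self.operational, self.security, self.legal)


# Hypothetical example values, not taken from any real assessment.
use_case = UseCase(
    stakeholders=["clinic", "patients"],
    problem="prioritise scans for review",
    approach="image classifier that flags scans for a radiologist",
)
analysis = RiskAnalysis(
    operational=Level.MEDIUM,
    security=Level.LOW,
    legal=Level.HIGH,
)
print(analysis.overall().name)
```

Taking the maximum is only one possible design choice. A real assessment would likely weigh the perspectives with far more nuance than a single ordinal score.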

High-risk systems must undergo a conformity assessment, which can be conducted using the Conformity Assessment Procedure for AI Systems (capAI) [3]. CapAI was created by researchers at the University of Oxford and provides an independent, comparable and quantifiable assessment built on the rules set out in the AIA. It is defined as a procedure to implement ethics-based auditing. Although only high-risk systems must undergo such an assessment, it may be beneficial to follow this protocol even with low-risk systems to ensure ethical behaviour.

Aside from the process of assessing AI, the AIA also specifies prohibited AI practices, which are banned outright. These include the use of real-time biometric identification systems in publicly accessible spaces for law enforcement, and the use of subliminal techniques to distort behaviour.

Though control is important in minimising the harmful effects that AI could bring, controls that are too strict could negatively impact innovation. AI holds great promise to support and advance humanity in many ways. For example, AI-supported mammogram screening has been reported to detect 20% more breast cancers than traditional screening by radiologists. This shows the positive impact the technology can have, but if there are too many restrictions, the development and deployment of these systems will be delayed, if not stopped. Overall, a balance is needed so that we can collectively reap the benefits of AI without causing any detriment to fundamental rights and values.

This is the third in a series of posts from the IET AI Technical Network committee, examining AI and the concerns around it. Look out for, and sign up to, this month’s webinar, AI in Conflicting Environments.

[1] European Commission, The Artificial Intelligence Act. 2021.

[2] Mökander, J., et al., Conformity assessments and post-market monitoring: a guide to the role of auditing in the proposed European AI regulation. Minds and Machines, 2021: p. 1-27.

[3] Floridi, L., et al., CapAI: A procedure for conducting conformity assessment of AI systems in line with the EU Artificial Intelligence Act. Available at SSRN 4064091, 2022.